If you are a user of Seapine Software’s TestTrack® tool, the work you do in your software development projects generally follows some sort of pre-defined workflow. The elapsed time for your project to traverse this workflow includes the reported actual time spent doing work as well as other time, such as time spent waiting for project resources to become available or doing other, non-project-related work. To better evaluate your software project’s progress, you need to capture how much of your project’s time is going toward reported actual work and how much is going toward other time. With this information you can assess the components and sources of this unattributed time and work toward better managing its impact on your project’s progress.

In this article, we will look at the elapsed time to complete a TestTrack-managed software development task in terms of both the reported actual time performing work and other time, define some TestTrack metrics you may use to assess time associated with these tasks, and provide examples of TestTrack’s Live Chart graphical reports you may generate to display and act on this information.

Actual vs. Elapsed Time

Actual time is user-reported time spent on workflow events for a TestTrack item. TestTrack’s configurable built-in Actual Hours time-tracking field captures and reports this time. In the article Create Customized TestTrack Metrics to Better Manage Software Projects, we looked at how you can configure TestTrack workflow events to include time-tracking fields for the actual time to work on a TestTrack item such as a Feature Implementation, Change Request or Defect.

Elapsed time is clock-time measured from the creation of a TestTrack item until its completion (for an item that is closed), or until the current time (for an item that is open). For a closed item, the elapsed time associated with the item will remain unchanged unless the item is subsequently re-opened. For an open item, the elapsed time associated with the item will continue to change as long as the item is open. This elapsed time associated with an item includes the actual time spent doing work on the item as well as all other time for such things as waiting for project resources to become available or time spent elsewhere doing other, non-project-related work.

You can improve management of software projects by looking at how much of the elapsed time it takes to complete software project items is accounted for by the actual time recorded to do the associated work. Significant differences between the actual (reported) and the elapsed (clock) times may require further exploration into the underlying root causes for these differences. You can define simple TestTrack customized metrics to help you do this.

Elapsed Time Metrics

For a TestTrack item (e.g., Feature Implementation), we can define the following metrics to describe elapsed time relationships. Elapsed time may be reported in any time units that work best for your project. Since TestTrack reports actual time in hours, for ease of comparison, report elapsed time and other time in hours.

Elapsed Hours = Item Closed Date – Item Created Date (closed items)

Elapsed Hours = Current Date – Item Created Date (open items)

Other Elapsed Hours = Elapsed Hours – Actual Hours
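
The three definitions above can be sketched in plain ECMAScript. This is only an illustration of the arithmetic, not TestTrack's calculated-field syntax: the function names, parameters, and sample dates are assumptions made up for this example.

```javascript
// Illustrative sketch only: function and parameter names are hypothetical,
// and this is plain ECMAScript rather than a TestTrack field formula.
const MS_PER_HOUR = 60 * 60 * 1000;

// Elapsed Hours: created -> closed for a closed item,
// created -> "now" for an item that is still open (closedDate is null).
function elapsedHours(createdDate, closedDate, now) {
  const end = closedDate !== null && closedDate !== undefined ? closedDate : now;
  return (end.getTime() - createdDate.getTime()) / MS_PER_HOUR;
}

// Other Elapsed Hours = Elapsed Hours - Actual Hours
function otherElapsedHours(elapsed, actualHours) {
  return elapsed - actualHours;
}

// Made-up sample item: created 6 Jan 2014 09:00 UTC, closed 8 Jan 2014 09:00 UTC.
const created = new Date(Date.UTC(2014, 0, 6, 9, 0, 0));
const closed = new Date(Date.UTC(2014, 0, 8, 9, 0, 0));

const elapsed = elapsedHours(created, closed, new Date());
console.log(elapsed);                        // 48
console.log(otherElapsedHours(elapsed, 10)); // 38
```

Note that elapsed time here is clock time, so nights and weekends count: an item with 10 reported Actual Hours that stays open for two calendar days shows 38 Other Elapsed Hours.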

Depending on how you have defined and configured your workflow, you may want to use a starting point for the elapsed time calculation other than an item’s creation date. For example, if your workflow calls for candidate project items to be created and placed in a “parking lot” folder for subsequent review for acceptance or rejection, you may choose to exclude “parking lot” folder time from the elapsed time calculation by defining a specific project event to represent your desired elapsed time start point.

Similarly, if your project workflow allows closed items to be re-opened and undergo additional work (e.g., rework), you may choose to subtract from your elapsed time calculation the clock time between the item’s closure and its re-opening. In the article Use TestTrack Metrics to Measure and Manage Software Project Rework, we looked at how you can create TestTrack metrics to characterize your software project workflow in terms of the original work and any subsequent rework to implement a new feature, change an existing feature, or fix a feature defect.
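
Both adjustments (a custom start point and excluding time spent closed before a re-open) can be sketched as follows. This is a minimal sketch under stated assumptions: the function name and the `closedPeriods` structure are hypothetical, the closed periods are assumed not to overlap one another, and how you obtain these dates from your own workflow events is left open.

```javascript
// Illustrative sketch only: names and data shapes are hypothetical.
const HOUR_MS = 60 * 60 * 1000;

// start:         the event chosen as the elapsed-time start point
//                (for example, acceptance out of the "parking lot")
// end:           the final close date, or the current time for an open item
// closedPeriods: array of { closed: Date, reopened: Date } spans to exclude;
//                assumed not to overlap one another
function adjustedElapsedHours(start, end, closedPeriods) {
  let excludedMs = 0;
  for (const period of closedPeriods) {
    // Clip each closed span to the [start, end] window before subtracting it.
    const from = Math.max(period.closed.getTime(), start.getTime());
    const to = Math.min(period.reopened.getTime(), end.getTime());
    if (to > from) {
      excludedMs += to - from;
    }
  }
  return (end.getTime() - start.getTime() - excludedMs) / HOUR_MS;
}

// Made-up example: accepted 6 Jan, finally closed 10 Jan (96 clock hours),
// but closed from 7 Jan until it was re-opened on 8 Jan (24 hours excluded).
const accepted = new Date(Date.UTC(2014, 0, 6));
const finalClose = new Date(Date.UTC(2014, 0, 10));
const periods = [
  { closed: new Date(Date.UTC(2014, 0, 7)), reopened: new Date(Date.UTC(2014, 0, 8)) }
];
console.log(adjustedElapsedHours(accepted, finalClose, periods)); // 72
```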

For projects following an Agile methodology, you can use TestTrack’s custom fields feature to define a Story_Points field where users may enter their Story Points estimates. You can also define a calculated metric field to assess how the elapsed time to complete a User Story compares to the User Story’s original Story Point level-of-effort estimate. We will define this metric as follows:

Elapsed Hours/Story Point Ratio = (Elapsed Hours) / (Story Points)
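
As a small illustration of this ratio, the sketch below also guards against User Stories that have no Story Points estimate yet, since dividing by zero would otherwise yield a meaningless value. The function name and the choice to return `null` for unestimated stories are assumptions for this example, not TestTrack behavior.

```javascript
// Illustrative sketch only: the function name and null convention are hypothetical.
// Returns elapsed hours per Story Point, or null when the story has no
// positive Story Points estimate to divide by.
function elapsedHoursPerStoryPoint(elapsedHours, storyPoints) {
  if (!(storyPoints > 0)) {
    return null;
  }
  return elapsedHours / storyPoints;
}

console.log(elapsedHoursPerStoryPoint(120, 5)); // 24
console.log(elapsedHoursPerStoryPoint(120, 0)); // null
```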

In the article Add Story Points Metrics To Your Agile Software Project Toolbox, I described how to set up a custom Story Points field and calculated custom Story Points-based metrics.

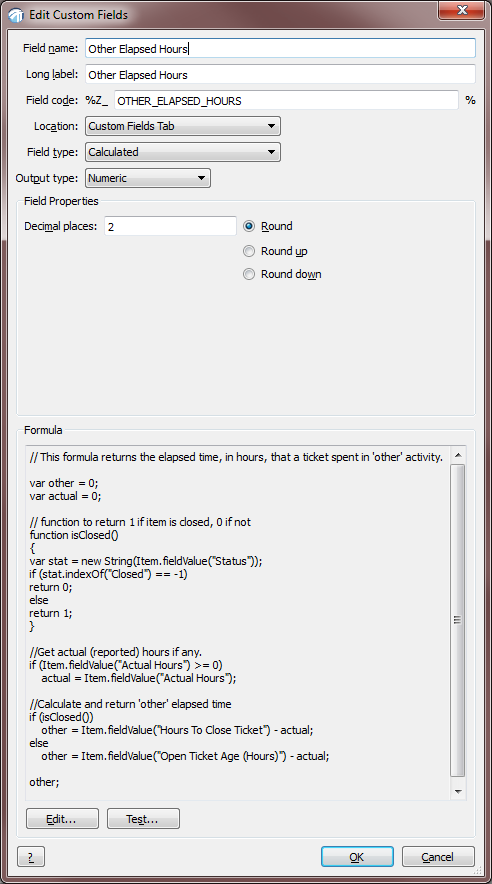

TestTrack calculated custom field formulas use ECMAScript, the standardized scripting language on which JavaScript is based. If you are not familiar with ECMAScript, refer to the ECMA-262 Standard Language Specification. You may also find descriptions of these formulas in Appendix F: Calculated Custom Field Functions, in the TestTrack User Guide (Version 2013.1 or later), or in the TestTrack 2014 online Help topic Reference: Calculated Custom Field Functions.

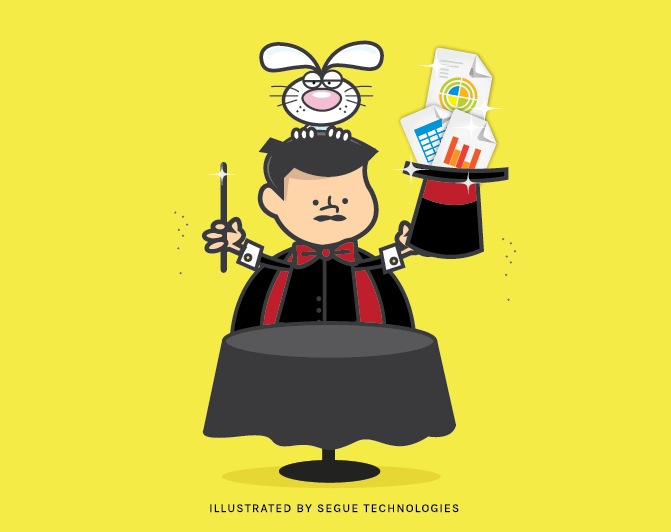

Example: TestTrack custom field definition for Elapsed Hours for a closed item

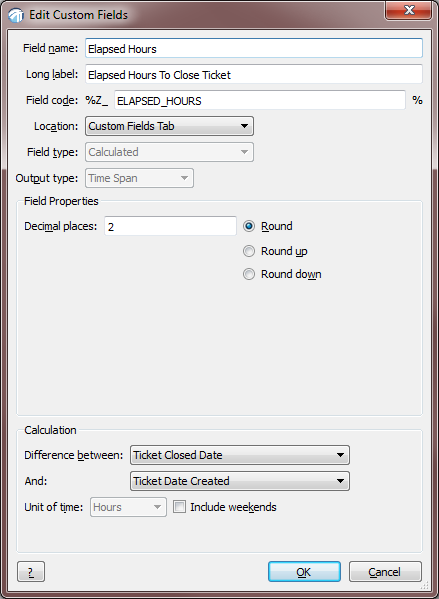

Example: TestTrack custom calculated field definition for Elapsed Hours for an open item

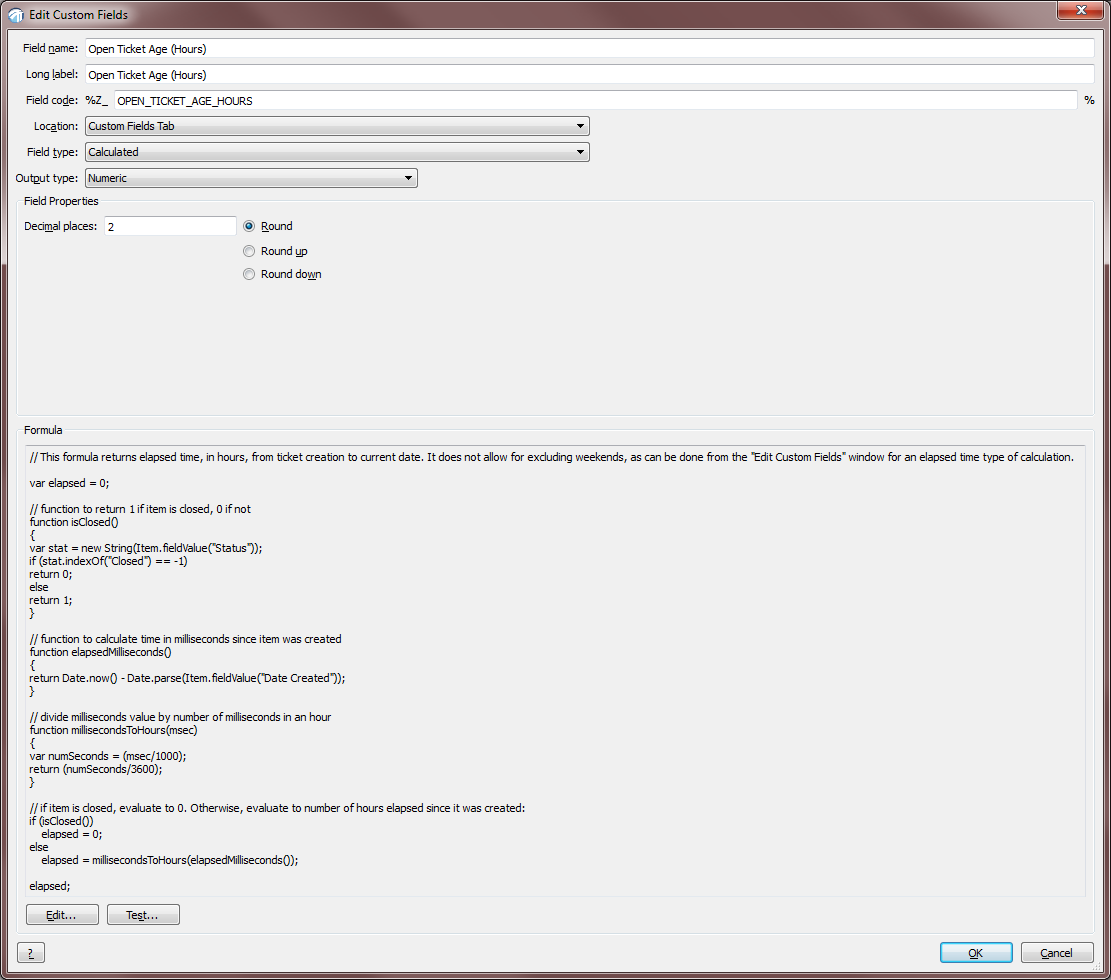

Example: TestTrack custom field definition for Elapsed Hours/Story Point Ratio metric

Example: TestTrack custom field definition for Other Elapsed Hours metric

Live Chart Reports

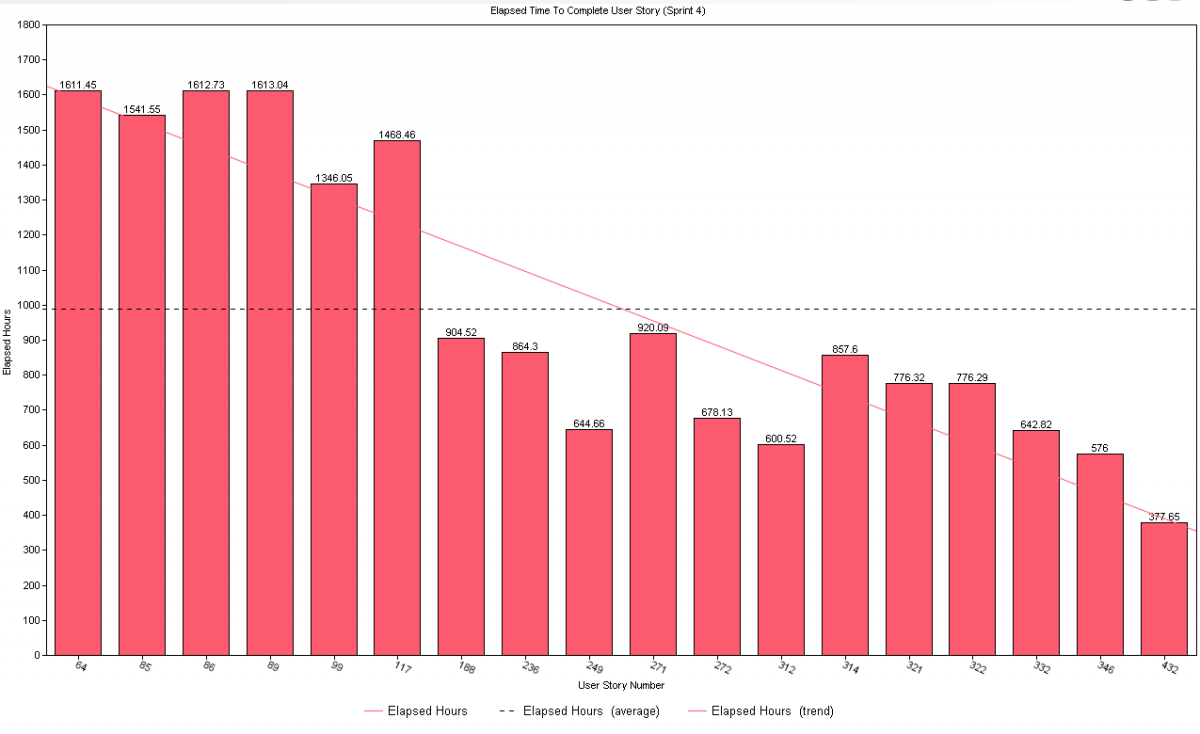

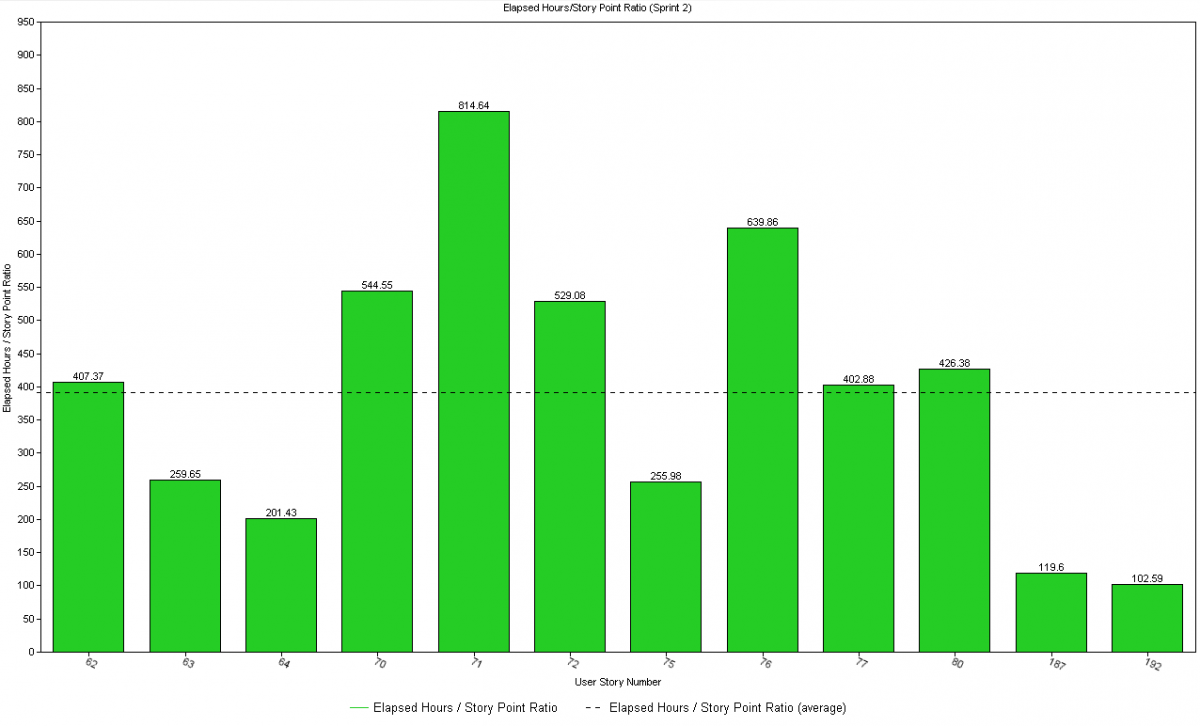

With these elapsed time metrics in place, you can now generate Live Chart (or other) reports to examine how reported actual work time and Story Point estimates relate to the elapsed time a project item was open.

The following Live Chart displays the elapsed times to complete User Stories for a Sprint, as well as the average elapsed time for these User Stories. On average, User Stories in this Sprint required slightly less than 1000 hours to complete. Additionally, as the Sprint progresses, the elapsed time to complete a User Story shows a downward trend.
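
For readers who want to check the average and the direction of the trend outside of a chart, here is a rough sketch. It assumes you have exported the Elapsed Hours values in completion order. The function names and sample numbers are made up for illustration and are not the data behind the chart.

```javascript
// Illustrative sketch only: names and sample values are hypothetical.
function mean(values) {
  return values.reduce((sum, v) => sum + v, 0) / values.length;
}

// Least-squares slope of the values against their position (0, 1, 2, ...).
// A negative slope means elapsed hours shrink as the Sprint progresses.
function trendSlope(values) {
  const n = values.length;
  if (n < 2) {
    return null; // a trend needs at least two points
  }
  const xMean = (n - 1) / 2;
  const yMean = mean(values);
  let numerator = 0;
  let denominator = 0;
  for (let i = 0; i < n; i++) {
    numerator += (i - xMean) * (values[i] - yMean);
    denominator += (i - xMean) * (i - xMean);
  }
  return numerator / denominator;
}

// Made-up Elapsed Hours for three User Stories, in completion order.
const sprintElapsedHours = [1200, 1000, 800];
console.log(mean(sprintElapsedHours));       // 1000
console.log(trendSlope(sprintElapsedHours)); // -200
```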

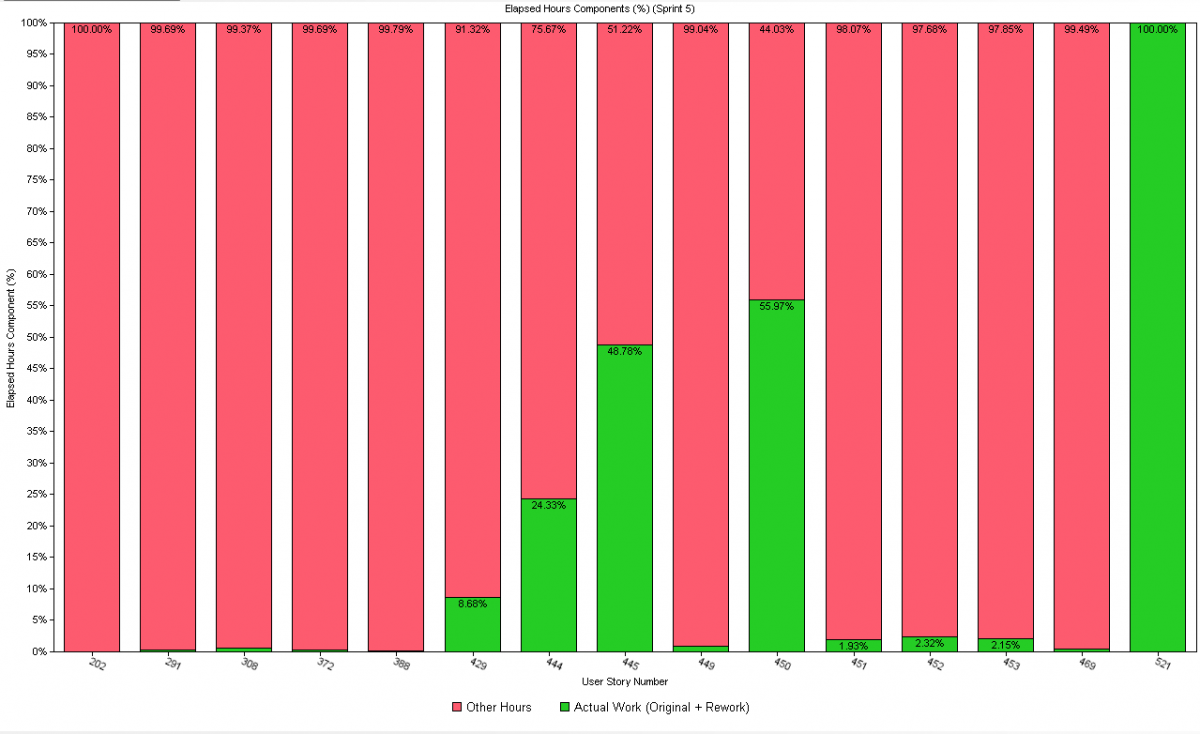

The next Live Chart report displays the percentage of the elapsed time associated with User Stories in a Sprint in terms of reported actual work time and other time. The User Stories in this Sprint show anywhere from 0% (#202) to 100% (#521) reported work time. For User Stories with a high percentage of their elapsed time attributed to other time, additional forensic review may be appropriate.
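
The percentage split shown in a report like this comes down to simple arithmetic, sketched below. The function and property names are assumptions for this example. Note that the sketch does not clamp the result, so the actual-time share could exceed 100% if, say, several people log hours against the same item in parallel.

```javascript
// Illustrative sketch only: function and property names are hypothetical.
// Splits an item's elapsed hours into the share reported as actual work
// and the share left over as other time.
function timeBreakdown(elapsedHours, actualHours) {
  if (!(elapsedHours > 0)) {
    return null; // nothing to split for a zero or missing elapsed time
  }
  const actualPct = (actualHours / elapsedHours) * 100;
  return { actualPct: actualPct, otherPct: 100 - actualPct };
}

const split = timeBreakdown(200, 50);
console.log(split.actualPct); // 25
console.log(split.otherPct);  // 75
```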

The last Live Chart displays the Elapsed Hours/Story Point Ratio for User Stories in a Sprint, as well as the average value for this metric. Overall, User Stories in this Sprint required slightly less than 400 elapsed clock hours to complete, but there was a wide range of times for these Stories. This level of performance must be judged in terms of the Sprint’s scope, composition, and duration. Perhaps larger User Stories should have been broken down into smaller units.

We have looked at just a few of the ways you can customize TestTrack to help you better manage your software projects. You now have metrics to monitor and manage project effort and duration in terms of the recorded actual work effort, elapsed clock-time and associated Story Points. You have seen how you can create effective Live Chart reports to display this information for review and assessment.

There is much more you can do to tailor TestTrack to better serve your project management information needs. If you are not sure where to begin, contact us at Segue Technologies and we can work with you. Don’t hesitate: get started now!